Example 26: Wrapper-based (normal Bayes) feature selection with Floating Search, verified by 2 classifiers.

#include <boost/smart_ptr.hpp>
#include <exception>
#include <iostream>
#include <cstdlib>
#include <string>
#include <vector>
#include "error.hpp"
#include "global.hpp"
#include "subset.hpp"
#include "data_intervaller.hpp"
#include "data_splitter.hpp"
#include "data_splitter_5050.hpp"
#include "data_splitter_cv.hpp"
#include "data_scaler.hpp"
#include "data_scaler_void.hpp"
#include "data_accessor_splitting_memTRN.hpp"
#include "data_accessor_splitting_memARFF.hpp"
#include "criterion_wrapper.hpp"
#include "distance_euclid.hpp"
#include "classifier_knn.hpp"
#include "classifier_normal_bayes.hpp"
#include "seq_step_straight.hpp"
#include "search_seq_sffs.hpp"

Functions | |

| int | main () |


int main ()

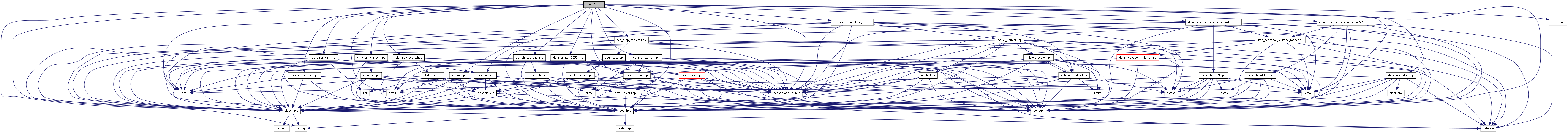

Floating Search (SFFS or SBFS) can be considered one of the best compromises between versatility, speed, ability to find close-to-optimum results, and robustness against overfitting. If you are in doubt about which feature subset search method to use, and the dimensionality of your problem is no more than roughly several hundred, try SFFS.

In this example, features are selected using the SFFS algorithm, with normal Bayes wrapper classification accuracy as the FS criterion. Classification accuracy (i.e., the FS wrapper criterion value) is estimated on the first 50% of the data samples by means of 3-fold cross-validation. The classification performance on the selected subspace is then validated on the second 50% of the data using two different classifiers. SFFS is called in the d-optimizing setting, which is invoked by passing 0 as the first argument of search(0,...); this argument is otherwise used to specify the required subset size.
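The floating search idea can be illustrated with a minimal, self-contained sketch. It is not the FST::Search_SFFS implementation and does not use any FST3 classes: the names `sffs`, `SffsResult`, `Subset`, `Criterion` and `toy_criterion` are hypothetical, and the toy criterion only stands in for the cross-validated normal Bayes accuracy used in the actual example. As an assumption that mirrors the description above, a `target` of 0 is treated as the d-optimizing setting (return the best subset of any size), while a positive `target` requests a subset of exactly that size.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <limits>
#include <vector>

using Subset = std::vector<int>;                         // indices of selected features
using Criterion = std::function<double(const Subset&)>;  // higher value = better subset

struct SffsResult {
    Subset subset;  // selected feature indices, sorted ascending
    double value;   // criterion value of that subset
};

// Hypothetical stand-in for a wrapper criterion: features 1 and 3 are
// "relevant", and every selected feature costs a small penalty.
double toy_criterion(const Subset& s) {
    int relevant = 0;
    for (int f : s) if (f == 1 || f == 3) ++relevant;
    return relevant - 0.1 * static_cast<double>(s.size());
}

// Simplified Sequential Forward Floating Search over n features.
// target == 0: return the best subset of any size (d-optimizing, assumed).
// target  > 0: return the best subset found of exactly that size.
SffsResult sffs(int n, const Criterion& J, int target = 0) {
    const double ninf = -std::numeric_limits<double>::infinity();
    std::vector<double> bestVal(n + 1, ninf);  // best value seen per subset size
    std::vector<Subset> bestSet(n + 1);        // subset that achieved it
    Subset cur;                                // current working subset
    std::vector<bool> used(n, false);

    while (static_cast<int>(cur.size()) < n) {
        // Forward step: add the single feature that maximizes the criterion.
        int add = -1;
        double addVal = ninf;
        for (int f = 0; f < n; ++f) {
            if (used[f]) continue;
            cur.push_back(f);
            const double v = J(cur);
            cur.pop_back();
            if (add < 0 || v > addVal) { add = f; addVal = v; }
        }
        cur.push_back(add);
        used[add] = true;
        if (addVal > bestVal[cur.size()]) {
            bestVal[cur.size()] = addVal;
            bestSet[cur.size()] = cur;
        }

        // Floating step: keep removing the least useful feature while the
        // smaller subset beats the best one known so far for its size.
        while (cur.size() > 2) {
            std::size_t rem = 0;
            double remVal = ninf;
            for (std::size_t i = 0; i < cur.size(); ++i) {
                Subset trial = cur;
                trial.erase(trial.begin() + static_cast<std::ptrdiff_t>(i));
                const double v = J(trial);
                if (i == 0 || v > remVal) { rem = i; remVal = v; }
            }
            if (!(remVal > bestVal[cur.size() - 1])) break;
            used[cur[rem]] = false;
            cur.erase(cur.begin() + static_cast<std::ptrdiff_t>(rem));
            bestVal[cur.size()] = remVal;
            bestSet[cur.size()] = cur;
        }
    }

    // Pick the requested size, or the best size overall when target == 0.
    int pick = (target > 0 && target <= n) ? target : 0;
    if (pick == 0) {
        for (int k = 1; k <= n; ++k)
            if (pick == 0 || bestVal[k] > bestVal[pick]) pick = k;
    }
    SffsResult r{bestSet[pick], bestVal[pick]};
    std::sort(r.subset.begin(), r.subset.end());
    return r;
}
```

With this toy criterion, `sffs(5, toy_criterion)` selects features 1 and 3, while `sffs(5, toy_criterion, 1)` is restricted to a single feature. In a wrapper setting, the criterion would instead train and evaluate a classifier on the candidate subset, so each call is far more expensive than in this sketch.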

References FST::Search_SFFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::get_result(), FST::Search_SFFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::search(), FST::Search< RETURNTYPE, DIMTYPE, SUBSET, CRITERION >::set_output_detail(), and FST::Search_SFFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::set_search_direction().

1.6.1
