Example 50: Voting ensemble of criteria.

#include <boost/smart_ptr.hpp>
#include <exception>
#include <iostream>
#include <cstdlib>
#include <string>
#include <vector>
#include "error.hpp"
#include "global.hpp"
#include "subset.hpp"
#include "data_intervaller.hpp"
#include "data_splitter.hpp"
#include "data_splitter_5050.hpp"
#include "data_splitter_cv.hpp"
#include "data_scaler.hpp"
#include "data_scaler_void.hpp"
#include "data_accessor_splitting_memTRN.hpp"
#include "data_accessor_splitting_memARFF.hpp"
#include "criterion_wrapper.hpp"
#include "distance_euclid.hpp"
#include "classifier_knn.hpp"
#include "seq_step_ensemble.hpp"
#include "search_seq_sfs.hpp"

Functions

| int | main () |

int main ()

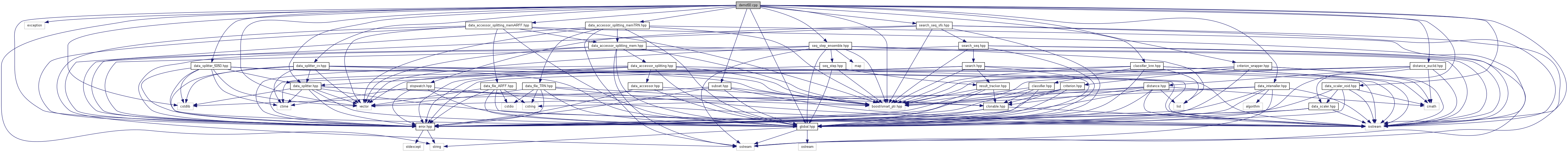

In this example, features are selected by a vote among three criteria: 1-NN, 5-NN and 9-NN classifier wrappers (estimated classification accuracy). The criteria vote on the feature candidates in each step of the sequential search algorithm. To enable voting, each criterion builds a list of feature candidates, sorted in descending order of criterion value. The positions of each candidate in all lists are then averaged, and the candidate with the best average position (i.e., the most universally preferred one) is selected for addition to, or removal from, the current working subset. The three values of k in k-NN are intended to cover feature preferences with varying (over)sensitivity to detail, which may yield a more robust subset (one less tightly coupled to a particular classifier).

The example setup requires one primary criterion to be used in the standard FST3 way to guide the steps of the sequential search algorithm; here the 3-NN wrapper is chosen for this purpose. The first 50% of the data is used for training; the second 50% is held out and used at the end to validate classification performance on the finally selected subspace. Classification accuracy (the wrapper value) is estimated on the training data by means of 3-fold cross-validation.

SFS is called in the d-optimizing setting, which is invoked by passing 0 as the first argument in search(0,...); that parameter otherwise specifies the required subset size.
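The voting step described above can be illustrated with a small standalone sketch. It is not FST3 code and does not use the FST3 API: the helper names `rank_positions` and `vote_best_candidate` are hypothetical, and the tie-breaking rule (the lowest candidate index wins) is an assumption, since the description does not say how ties are resolved. Each criterion ranks the candidates by descending value, and the candidate with the smallest summed position (equivalent to the best average position) is chosen.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper (not part of FST3): for one criterion, return the
// position of each candidate (0 = best) when candidates are sorted by
// descending criterion value. Equal values keep their original order.
std::vector<std::size_t> rank_positions(const std::vector<double>& values)
{
    std::vector<std::size_t> order(values.size());
    std::iota(order.begin(), order.end(), std::size_t{0});
    std::stable_sort(order.begin(), order.end(),
                     [&values](std::size_t a, std::size_t b) {
                         return values[a] > values[b];
                     });

    std::vector<std::size_t> position(values.size());
    for (std::size_t pos = 0; pos < order.size(); ++pos) {
        position[order[pos]] = pos;
    }
    return position;
}

// Hypothetical helper (not part of FST3): criterion_values[k][c] is the value
// that criterion k assigns to candidate c. Returns the index of the candidate
// with the best (lowest) average position over all criteria. Summing positions
// gives the same winner as averaging them. Assumed tie rule: lowest index wins.
std::size_t vote_best_candidate(
    const std::vector<std::vector<double>>& criterion_values)
{
    assert(!criterion_values.empty());
    const std::size_t n = criterion_values.front().size();
    assert(n > 0);

    std::vector<std::size_t> position_sum(n, 0);
    for (const auto& values : criterion_values) {
        assert(values.size() == n);
        const std::vector<std::size_t> position = rank_positions(values);
        for (std::size_t c = 0; c < n; ++c) {
            position_sum[c] += position[c];
        }
    }

    const auto best = std::min_element(position_sum.begin(), position_sum.end());
    return static_cast<std::size_t>(best - position_sum.begin());
}
```

For instance, if three criteria score three candidates as {0.9, 0.8, 0.7}, {0.6, 0.9, 0.5} and {0.5, 0.9, 0.8}, the summed positions are 3, 1 and 5, so candidate index 1 wins the vote even though the first criterion alone would have preferred candidate 0.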

References FST::Search_SFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::search(), FST::Search< RETURNTYPE, DIMTYPE, SUBSET, CRITERION >::set_output_detail(), and FST::Search_SFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::set_search_direction().

1.6.1
