Example 21: Generalized sequential feature subset search.

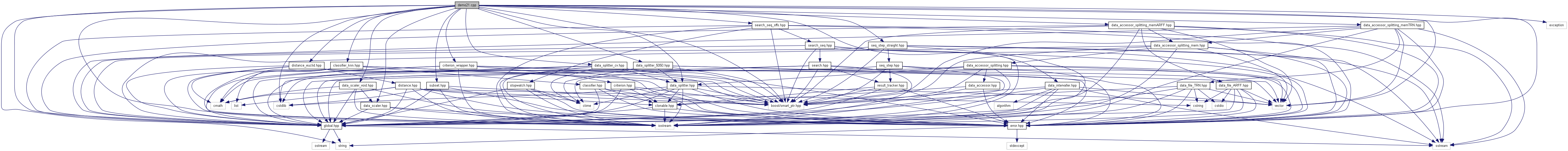

#include <boost/smart_ptr.hpp>
#include <exception>
#include <iostream>
#include <cstdlib>
#include <string>
#include <vector>
#include "error.hpp"
#include "global.hpp"
#include "subset.hpp"
#include "data_intervaller.hpp"
#include "data_splitter.hpp"
#include "data_splitter_5050.hpp"
#include "data_splitter_cv.hpp"
#include "data_scaler.hpp"
#include "data_scaler_void.hpp"
#include "data_accessor_splitting_memTRN.hpp"
#include "data_accessor_splitting_memARFF.hpp"
#include "criterion_wrapper.hpp"
#include "distance_euclid.hpp"
#include "classifier_knn.hpp"
#include "seq_step_straight.hpp"
#include "search_seq_sffs.hpp"

| Functions | |
|---|---|
| int | main () |

int main ()

All sequential search algorithms (SFS, SFFS, OS, DOS, SFRS) can be extended to operate in a "generalized" setting (a term coined in the book by Devijver and Kittler). In each step of a generalized sequential search algorithm, g-tuples of features are considered instead of single features: rather than adding the single best feature to the current subset or removing the single worst one, the algorithm searches for the group of g features that improves the current subset the most when added (or degrades it the least when removed). This is computationally more complex but increases the chance of finding the optimum or a result closer to it. Improvement is not guaranteed, however, and in some cases the result can actually be worse. The value of g is set by the user; the higher g is, the slower the search (time complexity increases exponentially with g). Note that setting g equal to the number of all features would effectively emulate exhaustive search.

In this example, features are selected using the generalized (G)SFFS algorithm (g=2) with 3-NN wrapper classification accuracy as the FS criterion. Classification accuracy (i.e., the FS wrapper criterion value) is estimated on the first 50% of data samples by means of 3-fold cross-validation. The final classification performance on the selected subspace is then validated on the second 50% of the data. (G)SFFS is called here in the d-optimizing setting, invoked by passing 0 as the first argument of search(0,...); that parameter is otherwise used to specify the required subset size.
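The idea of a single generalized forward step can be illustrated with a minimal, self-contained sketch. It does not use the FST library: the names `Subset`, `Criterion`, `best_g_tuple_to_add` and `toy_criterion` are hypothetical and exist only for this illustration, and the toy criterion stands in for the 3-NN wrapper accuracy used in the actual example. The sketch enumerates every g-tuple of features not yet selected and returns the one whose addition maximizes the criterion.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical types for illustration only (not part of FST).
typedef std::vector<std::size_t> Subset;                 // feature indices
typedef std::function<double(const Subset&)> Criterion;  // higher is better

// One generalized forward step: evaluate every g-tuple of features that are
// not in 'current' and return the g-tuple whose addition maximizes 'crit'.
// Returns an empty vector if fewer than g candidate features remain.
// If 'evaluations' is non-null, it is incremented once per criterion call.
Subset best_g_tuple_to_add(const Subset& current, std::size_t n_features,
                           std::size_t g, const Criterion& crit,
                           std::size_t* evaluations = nullptr)
{
    assert(g > 0);

    // Collect the features that are not yet selected.
    Subset candidates;
    for (std::size_t f = 0; f < n_features; ++f)
        if (std::find(current.begin(), current.end(), f) == current.end())
            candidates.push_back(f);

    Subset best;
    if (candidates.size() < g) return best;

    // Mask with exactly g ones; std::prev_permutation walks through all
    // combinations of g candidates when started from 1...10...0.
    std::vector<int> mask(candidates.size(), 0);
    std::fill(mask.begin(), mask.begin() + g, 1);

    double best_value = 0.0;
    bool have_best = false;
    do {
        Subset tuple;
        Subset trial = current;
        for (std::size_t i = 0; i < candidates.size(); ++i) {
            if (mask[i]) {
                tuple.push_back(candidates[i]);
                trial.push_back(candidates[i]);
            }
        }
        const double value = crit(trial);
        if (evaluations) ++(*evaluations);
        if (!have_best || value > best_value) {
            best_value = value;
            best = tuple;
            have_best = true;
        }
    } while (std::prev_permutation(mask.begin(), mask.end()));

    return best;
}

// Toy criterion over 4 features (an assumption made for this sketch):
// each feature has an individual score, and features 1 and 2 earn a
// bonus only when they are selected together.
double toy_criterion(const Subset& s)
{
    static const double score[4] = {0.5, 0.1, 0.1, 0.3};
    double value = 0.0;
    bool has1 = false, has2 = false;
    for (std::size_t i = 0; i < s.size(); ++i) {
        value += score[s[i]];
        if (s[i] == 1) has1 = true;
        if (s[i] == 2) has2 = true;
    }
    if (has1 && has2) value += 1.0;
    return value;
}
```

Calling `best_g_tuple_to_add(Subset(), 4, 1, toy_criterion)` picks feature 0, the best single feature, whereas the same call with g set to 2 picks the pair of features 1 and 2, which are individually weak but strong together. The price is more criterion evaluations per step (6 pairs instead of 4 single features here), and that count grows quickly with g.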

References FST::Search_SFFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::get_result(), FST::Search_SFFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::search(), FST::Search_Sequential< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::set_generalization_level(), FST::Search< RETURNTYPE, DIMTYPE, SUBSET, CRITERION >::set_output_detail(), and FST::Search_SFFS< RETURNTYPE, DIMTYPE, SUBSET, CRITERION, EVALUATOR >::set_search_direction().

1.6.1